I do interesting things with computers, code, comedy, music and video, then I travel all over the world and tell people about it. I provide software training and consultancy through my company Ursatile. I'm a keynote speaker, I'm a Microsoft MVP, I created Rockstar, an esoteric programming language that started as a joke and ended up in Classic Rock magazine, and I own the best web address in the history of the internet.

About Me

Who I am and what I do, plus speaker bios and photographs to use if you'd like details for your event website.

Speaking

I speak at technology conferences, meetups and community events all over the world.

Projects

Tech things I've done, including the Rockstar programming language.

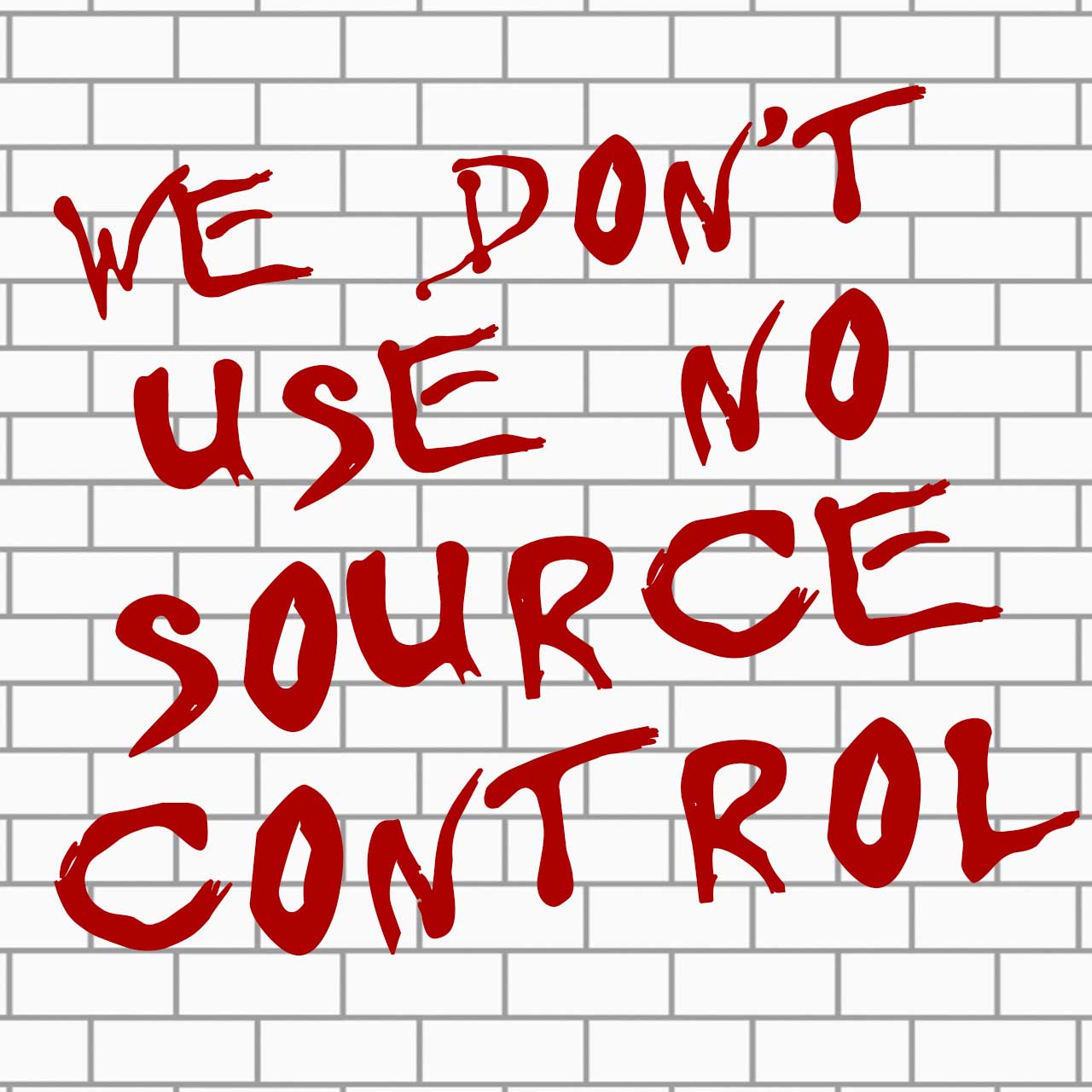

Musical Parodies

I write and perform musical parodies of classic songs. You'll find some of them here.